Engineering challenges: Caching under high load

What’s wrong with this piece of code?

```php
function get_foo() {
	$cache_key = 'fookey';
	$data      = wp_cache_get( $cache_key );

	if ( false === $data ) {
		sleep( 10 );
		$data = 'foodata';
		wp_cache_add( $cache_key, $data, '', HOUR_IN_SECONDS );
	}

	return $data;
}
```

Under low server load, not much. A cache miss like this typically results in a single recalculation of data, with the majority of visitors getting cache hits when reaching that function.

However, under heavier loads (e.g., 1,000+ requests per minute), the data may be recalculated many times simultaneously. Before the first request can finish and store its result in the cache, additional requests arrive, miss the cache, and trigger duplicate calculations. This is known as cache stampeding, or the thundering herd problem.
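To see how quickly the duplicate work adds up, here's a toy model in plain PHP (no WordPress involved). It assumes requests arrive evenly spaced and that every recalculation takes a fixed 10 seconds, like the `sleep( 10 )` above. It then counts how many requests miss the cache before the first recalculation lands. `count_recalculations()` is a hypothetical name used only for illustration.

```php
<?php
/**
 * Toy model of a cache stampede (hypothetical helper, not WordPress code).
 * Every request that arrives before the first recalculation has finished
 * also sees a cache miss and starts its own recalculation.
 *
 * @param array $arrivals        Request arrival times, in seconds.
 * @param float $compute_seconds How long one recalculation takes.
 * @return int Number of recalculations triggered.
 */
function count_recalculations( array $arrivals, float $compute_seconds ): int {
	sort( $arrivals );
	$cached_at      = INF; // Moment the cache is first populated.
	$recalculations = 0;

	foreach ( $arrivals as $t ) {
		if ( $t >= $cached_at ) {
			continue; // Cache hit: the first recalculation has already landed.
		}
		// Cache miss: this request starts its own recalculation.
		$recalculations++;
		$cached_at = min( $cached_at, $t + $compute_seconds );
	}

	return $recalculations;
}

// 1,000 requests per minute, evenly spaced, against a 10-second recalculation.
$arrivals = array();
for ( $i = 0; $i < 1000; $i++ ) {
	$arrivals[] = $i * 0.06;
}
echo count_recalculations( $arrivals, 10.0 ), PHP_EOL; // 167
```

Under the same assumptions, cutting the recalculation to one second drops the count to 17. This is why slow third-party calls make stampedes so much worse.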

If the data calculation calls a third-party service, as in the following example, at best you hit its rate limit. At worst, the flood of requests amounts to a denial of service that takes down or severely restricts access to the API, setting off alarms for support engineers:

```php
function get_foo() {
	$cache_key = 'fookey';
	$data      = wp_cache_get( $cache_key );

	if ( false === $data ) {
		$response = wp_remote_get( 'http://example.com/some_api', array(
			'timeout' => 10,
		) );

		// For the sake of the example, we're skipping the part where we check for a 200 response.
		$data = json_decode( $response['body'], true );

		wp_cache_add( $cache_key, $data, '', HOUR_IN_SECONDS );
	}

	return $data;
}
```
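The example above deliberately skips the response check. The sketch below shows one way it might look using WordPress's HTTP API helpers (`is_wp_error()`, `wp_remote_retrieve_response_code()`, `wp_remote_retrieve_body()`). `fetch_foo_data()` is a hypothetical helper name. The stand-in functions at the top return a canned response so the snippet runs outside WordPress; inside WordPress they are skipped.

```php
<?php
// Stand-ins so this sketch runs outside WordPress (no network access).
// Inside WordPress the real HTTP API functions exist and this block is skipped.
if ( ! function_exists( 'wp_remote_get' ) ) {
	$GLOBALS['demo_response'] = array(
		'response' => array( 'code' => 200 ),
		'body'     => '{"foo":"bar"}',
	);
	function wp_remote_get( $url, $args = array() ) {
		return $GLOBALS['demo_response']; // Canned response.
	}
	function is_wp_error( $thing ) {
		return false; // The stub never produces a WP_Error.
	}
	function wp_remote_retrieve_response_code( $response ) {
		return $response['response']['code'] ?? '';
	}
	function wp_remote_retrieve_body( $response ) {
		return $response['body'] ?? '';
	}
}

/**
 * Hypothetical helper: fetch and decode the API response. Returns null on a
 * transport error, a non-200 status, or a body that doesn't decode to an array.
 */
function fetch_foo_data( $url ) {
	$response = wp_remote_get( $url, array( 'timeout' => 10 ) );

	if ( is_wp_error( $response ) ) {
		return null; // Timeout, DNS failure, refused connection, etc.
	}
	if ( 200 !== (int) wp_remote_retrieve_response_code( $response ) ) {
		return null; // Rate limited (429), server error (5xx), and so on.
	}

	$data = json_decode( wp_remote_retrieve_body( $response ), true );

	return is_array( $data ) ? $data : null;
}
```

In `get_foo()`, you would then skip the `wp_cache_add()` call when the helper returns null, so that a failed response isn't cached for an hour. Keep in mind that if failures aren't cached at all, every request retries the failing API. A short-lived cache entry for failures is one way to soften that.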

This code might seem acceptable, given that the worst-case scenario is a visitor waiting 10 seconds or more for their request to process. Under some conditions, that's true.

When is it true?

Consider a hypothetical website that only calls get_foo() on its homepage; if the right kind of caching is in place, there is little to worry about.

Suppose a caching reverse proxy sits in front of the app server. It lets only one request per resource through to the backend at a time and serves stale cached data to every other request in the meantime. In that setup, the problem is unlikely to arise.

If you wear a DevOps hat, you'll probably recognize these two solutions:

Varnish + grace mode and NGINX + proxy_cache_use_stale.

However, if get_foo() isn't limited to the homepage, the caching reverse proxy won't help much. It still lets one request through for each URL, so cache misses on many different pages at once can overwhelm the server.

Here are several solutions to the above problem, each of which can be applied on a case-by-case basis:

- Do nothing. This is a valid approach for the majority of sites on the internet. Unless your site serves more than a dozen requests a minute, the number of visitors affected by this issue approaches zero.

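- Out-of-band scheduled updating via WP-CLI.

```php
// Run on a schedule through WP-CLI, e.g. `wp eval 'get_foo();'` from system cron.
get_foo();

/*
 * If it needs to get more complicated, you can also use WP-Cron
 * (just remember its drawbacks), or add time-based segregation to your
 * script ( 0 === date( 'i' ) % $interval ).
 *
 * Modify get_foo() so it always recaches when called from WP-CLI:
 */
function get_foo() {
	$cache_key = 'fookey';
	$data      = wp_cache_get( $cache_key );

	if ( false === $data || ( defined( 'WP_CLI' ) && WP_CLI ) ) {
		sleep( 10 );
		$data = 'foodata';

		// Use set, not add: add fails if the key already exists, so a WP-CLI refresh would never overwrite it.
		wp_cache_set( $cache_key, $data );
	}

	// Return on cache hits too, not only after a recalculation.
	return $data;
}
```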
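The comment above suggests time-based segregation so that a frequently launched script only does the expensive work every few minutes. The sketch below assumes system cron runs a script through WP-CLI once a minute (for example with `wp eval-file`). It pulls the modulo check into a small, testable helper. `should_refresh()` and the 15-minute interval are hypothetical choices for illustration.

```php
<?php
/**
 * Hypothetical helper: true only on minutes that are a multiple of $interval,
 * so a script launched every minute refreshes the cache every $interval minutes.
 */
function should_refresh( int $minute, int $interval ): bool {
	return 0 === $minute % $interval;
}

// In the script WP-CLI runs once a minute. get_foo() is the function above;
// when called under WP-CLI it always recaches.
if ( should_refresh( (int) date( 'i' ), 15 ) && function_exists( 'get_foo' ) ) {
	get_foo();
}
```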

- Out-of-band triggered request. Like the previous approach, this always serves cached data. The difference is that it uses TechCrunch WP Asynchronous Tasks (co-developed by 10up) to refresh the data in a separate background request, without affecting the visitor experience.
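WP Asynchronous Tasks isn't reproduced here. As a rough stand-in for the same idea, the sketch below swaps in WordPress core's `wp_schedule_single_event()`. Visitors always get whatever is cached, and an expired entry only queues a background refresh. It assumes the cached value carries its own soft expiry (`expires`), so a stale copy stays available. `refresh_foo` is a hypothetical hook name. The stand-ins at the top exist only so the snippet runs outside WordPress. Bear in mind that WP-Cron runs the event the next time cron fires, not instantly.

```php
<?php
// Minimal stand-ins so this runs outside WordPress. Inside WordPress the
// real functions already exist and this block is skipped.
if ( ! function_exists( 'wp_cache_get' ) ) {
	$GLOBALS['demo_cache']  = array();
	$GLOBALS['demo_events'] = array();
	function wp_cache_get( $key, $group = '' ) {
		return $GLOBALS['demo_cache'][ $key ] ?? false;
	}
	function wp_cache_set( $key, $data, $group = '', $expire = 0 ) {
		$GLOBALS['demo_cache'][ $key ] = $data;
		return true;
	}
	function wp_schedule_single_event( $timestamp, $hook, $args = array() ) {
		$GLOBALS['demo_events'][] = $hook; // Record the request; nothing runs.
		return true;
	}
	function add_action( $hook, $callback, $priority = 10, $accepted_args = 1 ) {
		return true;
	}
}
if ( ! defined( 'HOUR_IN_SECONDS' ) ) {
	define( 'HOUR_IN_SECONDS', 3600 );
}

// Visitors never wait on the slow call: they get whatever is cached, possibly stale.
function get_foo() {
	$entry = wp_cache_get( 'fookey' ); // array( 'data' => ..., 'expires' => timestamp )

	if ( false === $entry || $entry['expires'] < time() ) {
		// Core ignores an identical event scheduled within ten minutes of an
		// existing one, so a burst of requests queues a single refresh.
		wp_schedule_single_event( time(), 'refresh_foo' );
	}

	return false === $entry ? null : $entry['data'];
}

// Runs in the background via WP-Cron, not during a visitor's request.
function refresh_foo() {
	$data = 'foodata'; // Stands in for the slow API call.

	// No cache TTL: the entry carries its own soft expiry so a stale copy survives.
	wp_cache_set( 'fookey', array( 'data' => $data, 'expires' => time() + HOUR_IN_SECONDS ) );
}
add_action( 'refresh_foo', 'refresh_foo' );
```

On a cold cache, the first visitors get null. A real implementation would likely fall back to a synchronous fetch in that case, or prime the cache ahead of time.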

- Mutex, which adds a second cache key, 'fookey_updating', while 'fookey' is missing and being regenerated. Other requests then either sleep until the update finishes or return a stale version of the data, kept under 'fookey_stale' in the example below.

```php
function get_foo() {
	$cache_key          = 'fookey';
	$cache_key_stale    = 'fookey_stale';
	$cache_key_updating = 'fookey_updating';

	$data = wp_cache_get( $cache_key );

	if ( false === $data ) {
		$data = wp_cache_get( $cache_key_stale );

		// wp_cache_add() fails if the key already exists, so only one request gets the lock.
		// The 30-second expiry clears the lock if the request is terminated mid-update.
		$has_lock = wp_cache_add( $cache_key_updating, true, '', 30 );

		// Recalculate if we hold the lock, or if there's no stale copy to fall back on.
		if ( $has_lock || false === $data ) {
			sleep( 10 );
			$data = 'foodata';

			wp_cache_add( $cache_key, $data, '', HOUR_IN_SECONDS );
			// Use set, not replace: replace fails when the stale key doesn't exist yet.
			wp_cache_set( $cache_key_stale, $data );

			if ( $has_lock ) {
				wp_cache_delete( $cache_key_updating );
			}
		}
	}

	return $data;
}
```
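The description above also mentions making other requests sleep instead of serving stale data. Below is a minimal sketch of that variant. The request that wins `wp_cache_add()` on the lock key recalculates; the others poll the cache briefly, then give up and compute the value themselves. `get_foo_waiting()` and `compute_foo()` are hypothetical names. The in-memory stand-ins (which ignore expiry) only exist so the snippet runs outside WordPress.

```php
<?php
// In-memory stand-ins so this runs outside WordPress (expiry is ignored).
// Inside WordPress the real object cache functions exist and this is skipped.
if ( ! function_exists( 'wp_cache_get' ) ) {
	$GLOBALS['demo_cache'] = array();
	function wp_cache_get( $key, $group = '' ) {
		return $GLOBALS['demo_cache'][ $key ] ?? false;
	}
	function wp_cache_add( $key, $data, $group = '', $expire = 0 ) {
		if ( array_key_exists( $key, $GLOBALS['demo_cache'] ) ) {
			return false; // Add only succeeds when the key is absent.
		}
		$GLOBALS['demo_cache'][ $key ] = $data;
		return true;
	}
	function wp_cache_set( $key, $data, $group = '', $expire = 0 ) {
		$GLOBALS['demo_cache'][ $key ] = $data;
		return true;
	}
	function wp_cache_delete( $key, $group = '' ) {
		unset( $GLOBALS['demo_cache'][ $key ] );
		return true;
	}
}
if ( ! defined( 'HOUR_IN_SECONDS' ) ) {
	define( 'HOUR_IN_SECONDS', 3600 );
}

$GLOBALS['foo_computations'] = 0;

// Hypothetical stand-in for the slow recalculation; counts how often it runs.
function compute_foo() {
	$GLOBALS['foo_computations']++;
	return 'foodata';
}

function get_foo_waiting( $max_polls = 20, $poll_ms = 250 ) {
	$data = wp_cache_get( 'fookey' );
	if ( false !== $data ) {
		return $data;
	}

	// Only one request can add the lock key; the 30 s expiry frees it if that request dies.
	$have_lock = wp_cache_add( 'fookey_updating', true, '', 30 );

	if ( ! $have_lock ) {
		// Someone else is recalculating: sleep and re-check instead of piling on.
		for ( $i = 0; $i < $max_polls; $i++ ) {
			usleep( $poll_ms * 1000 );
			$data = wp_cache_get( 'fookey' );
			if ( false !== $data ) {
				return $data;
			}
		}
		// Waited long enough; fall through and compute it ourselves.
	}

	$data = compute_foo();
	wp_cache_set( 'fookey', $data, '', HOUR_IN_SECONDS );

	if ( $have_lock ) {
		wp_cache_delete( 'fookey_updating' ); // Only the lock owner releases it.
	}

	return $data;
}
```

Falling back to a recalculation after the wait bounds each visitor's delay, at the cost of letting some duplicate work through if the lock holder is slow. The stale-copy version above avoids both by serving old data.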

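- Re-caching, which works well for a widget included on every page of the site. The expensive call is routed through a single endpoint on your own site that sits behind the caching reverse proxy.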

```php
// example.com is a third-party site; 10upclient.com is the production site.
function get_foo() {
	$cache_key = 'fookey';
	$data      = wp_cache_get( $cache_key );

	if ( false === $data ) {
		// Request our own endpoint, which the reverse proxy caches and serves stale.
		$response = wp_remote_get( 'http://10upclient.com/wp-admin/admin-ajax.php?action=some_api', array( 'timeout' => 10 ) );
		$data     = json_decode( $response['body'], true );

		wp_cache_add( $cache_key, $data, '', HOUR_IN_SECONDS );
	}

	return $data;
}

// Ajax handlers are actions that output their response, not filters that return it.
add_action( 'wp_ajax_nopriv_some_api', function () {
	$response = wp_remote_get( 'http://example.com/some_api', array( 'timeout' => 10 ) );

	// For the sake of the example, we're skipping the part where we check for a 200 response.
	$data = json_decode( $response['body'], true );

	wp_send_json( $data ); // Outputs JSON and ends the request.
} );
```

Every cache miss is funneled through a single URL, http://10upclient.com/wp-admin/admin-ajax.php?action=some_api. As a result, Varnish or NGINX can serve stale content for that resource and let only one request at a time through to the endpoint.

- Edge Side Includes (ESI). Similar to re-caching, ESI dynamically generates the HTML for a widget from a separate endpoint. It's included here for completeness, but ESI is not recommended for WordPress. Each page section requires a call through the full WordPress stack, potentially turning one request into many. WordPress isn't architected to serve fragments of pages by default, though it can be customized to do so.

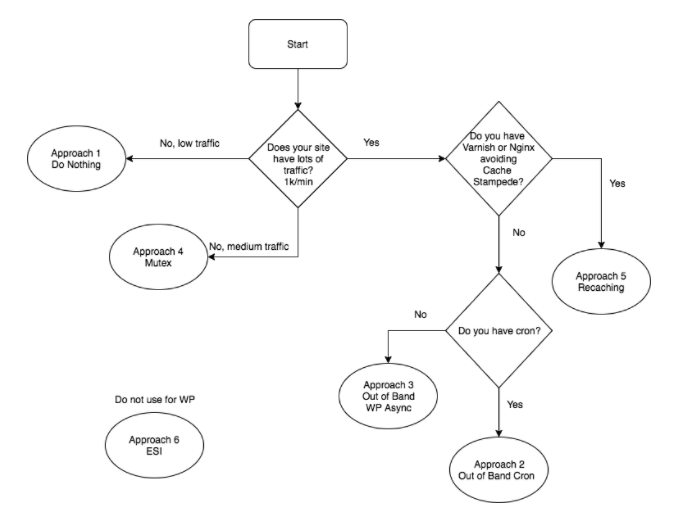

This flowchart can be used to identify the best solution for each use case. If you enjoy unpacking complex engineering challenges like these, consider applying to 10up.

Ivan Kruchkoff
